E-Stop

By Ben Nitkin on

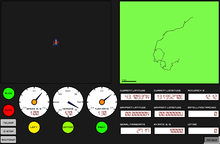

By now, you probably know I'm building an autonomous robot as a senior project. (Psst. If not, read about it here.)

Now, this robot is driven by a pair of horsepower motors. Given full throttle, it'll easily hit 30 mph. Even with safeties baked into the autonav code and Arduino motor driver, we need emergency stops. In fact, we have three. The first is implemented in the packet radio: we've defined a code that will immediately kill the robot. The second is a button on the robot that cuts power to the main relay. The third is a hardware radio E-Stop. That's the most interesting one, and I'm going to talk a bit about how it's designed. And since you're such great listeners, you'll listen. Thanks!

The radio E-Stop has a few requirements. I'm going to put them in a list, since I just realized that breaking up text makes it easier to read. It must:

- not use any software (microcontrollers are presumably banned).

- have a range exceeding 100'.

- bring the robot to a "quick and complete stop".

- be held by the judges during competition.

Okay. The last 'requirement' isn't technical, but it requires the E-Stop to be portable.
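For a sense of scale, here's a back-of-the-envelope sketch of how the 30 mph top speed and the 100' range requirement relate. The function names are made up for illustration, and it assumes the robot is actually running flat out, which is the worst case rather than the expected one.

```python
# Rough arithmetic only: how fast does a runaway robot eat up the E-Stop range?
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600


def mph_to_feet_per_second(mph: float) -> float:
    """Convert a speed in miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR


def seconds_to_cover(distance_ft: float, speed_mph: float) -> float:
    """Time (in seconds) to travel distance_ft at a constant speed_mph."""
    return distance_ft / mph_to_feet_per_second(speed_mph)


top_speed_fps = mph_to_feet_per_second(30)       # full-throttle speed from above
time_to_leave_range = seconds_to_cover(100, 30)  # 100' is the minimum required range

print(f"30 mph is {top_speed_fps:.0f} ft/s")
print(f"100 ft goes by in about {time_to_leave_range:.1f} s")
```

At full throttle the robot covers the minimum range in a little over two seconds, which is why "exceeding 100'" is a floor and not a target.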

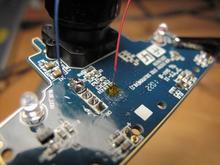

Given those requirements, I started putting together details about the E-Stop. It needed a radio good to 100', so I found a cheap transmitter/receiver pair on SparkFun. They're friendly in that they're easy to use, but that suggests a problem: what if someone else at the competition uses the same radio? We clearly needed some way to distinguish our E-Stop from random noise and from other teams' transmitters. But it can't be too complicated; we're on a deadline and can't use software to distinguish long patterns.